Artificial intelligence and machine learning rely on powerful frameworks to build and deploy models efficiently. Among these, PyTorch has gained widespread popularity for its flexibility, ease of use, and dynamic computation graph. Developed by Facebook’s AI Research lab, PyTorch simplifies deep learning development, making it a preferred choice for researchers and developers.

Unlike frameworks built around static graphs, PyTorch builds its computation graph as the code runs, so a model can be changed and re-run without a separate compilation step. With GPU acceleration and an expanding community, PyTorch drives innovation in areas such as natural language processing, computer vision, and healthcare. This article covers what PyTorch is, how it works, and why it has become so widely adopted in AI.

Understanding PyTorch

At its core, PyTorch is an open-source machine learning framework primarily used for deep learning. It was introduced to make building machine learning models simpler and faster. Unlike frameworks that emphasize rigid structures and focus on deployment, PyTorch was designed to prioritize research and experimentation, making it a go-to tool for AI researchers.

PyTorch builds on the earlier Torch library and offers both Python and C++ interfaces, giving flexibility in constructing complex models. Its major features are dynamic computation graphs, simple-to-use APIs, and built-in GPU acceleration, all of which make it extremely versatile. Essentially, PyTorch offers a powerful but easy-to-understand platform for constructing neural networks.
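To give a feel for what constructing a neural network looks like, here is a minimal sketch. The class name `TinyNet` and the layer sizes are made up for illustration; only `torch.nn.Module`, `nn.Linear`, and `torch.relu` come from PyTorch itself.

```python
import torch
from torch import nn


class TinyNet(nn.Module):
    """Hypothetical two-layer network; the sizes are arbitrary."""

    def __init__(self, in_features=4, hidden=8, out_features=2):
        super().__init__()
        self.fc1 = nn.Linear(in_features, hidden)
        self.fc2 = nn.Linear(hidden, out_features)

    def forward(self, x):
        # Linear layer -> ReLU -> linear layer
        return self.fc2(torch.relu(self.fc1(x)))


model = TinyNet()
batch = torch.randn(3, 4)   # 3 samples with 4 features each
logits = model(batch)       # one row of 2 scores per sample
print(logits.shape)
```

A model is an ordinary Python class, and calling it on a tensor runs the `forward` method.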

How PyTorch Works

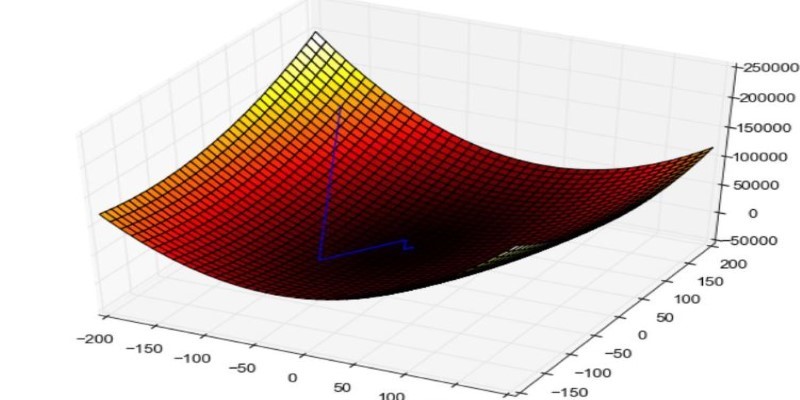

Understanding how PyTorch works helps developers get the most out of it. Its most distinctive feature is the dynamic computation graph. Frameworks built around static graphs, such as TensorFlow 1.x, define and fix the computational graph before the model runs, so the developer cannot easily adjust the model's architecture at run time. PyTorch instead builds the graph as the code executes, which makes changes possible at run time. This approach is known as "define-by-run."

With PyTorch, when you perform operations on tensors (multi-dimensional arrays), the framework automatically builds the computational graph on the fly. This provides significant flexibility, especially when experimenting with different architectures or debugging models. Because the graph follows ordinary Python execution, models are easier to modify, inspect, and debug, which makes the iterative process of research and development smoother and faster.
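The sketch below illustrates define-by-run on a toy problem. The function `forward` and its stopping rule are invented for this example. What matters is that a plain Python `while` loop, whose length depends on the data, decides what the graph looks like, and autograd still computes the gradient.

```python
import torch


def forward(x, w):
    # Ordinary Python control flow: the number of multiplications
    # depends on the values flowing through the computation.
    h = x
    steps = 0
    while h.norm() < 10 and steps < 5:
        h = h * w
        steps += 1
    return h.sum(), steps


x = torch.tensor([1.0, 2.0])
w = torch.tensor(2.0, requires_grad=True)

loss, steps = forward(x, w)
loss.backward()  # walks back through the graph recorded during this call

print(steps)          # 3 multiplications were recorded for this input
print(loss.item())    # 24.0, i.e. (1 + 2) * 2**3
print(w.grad.item())  # 36.0, i.e. d/dw of 3 * w**3 at w = 2
```

With a different input the loop could run a different number of times, and the graph recorded for that call would differ accordingly.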

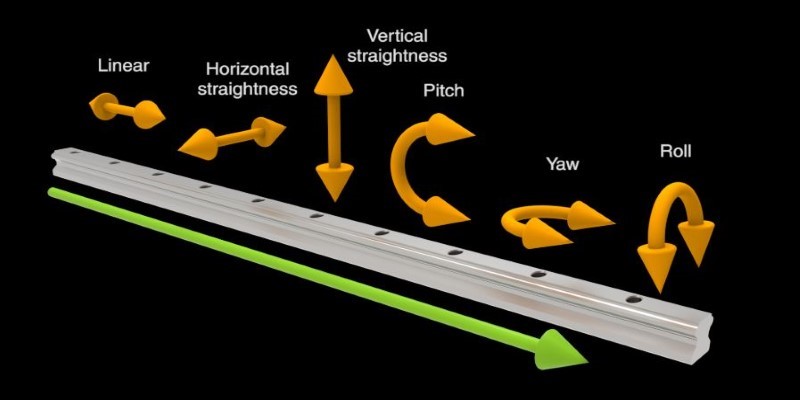

Another key aspect of PyTorch is its support for GPU acceleration. Deep learning tasks often require massive computational resources, especially when training large neural networks on large datasets. PyTorch runs on NVIDIA GPUs through CUDA, which shortens training times and lets researchers handle more complex models. This makes it particularly useful in real-world applications where speed and scale matter, such as image recognition and natural language processing (NLP).
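In practice, moving work to a GPU usually comes down to choosing a device and creating tensors on it. The following is a minimal sketch that falls back to the CPU when no CUDA device is present. The matrix sizes are arbitrary.

```python
import torch

# Use the GPU when CUDA is available, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

a = torch.randn(256, 256, device=device)
b = torch.randn(256, 256, device=device)
c = a @ b  # the matrix multiply runs on whichever device holds a and b

print(c.device, c.shape)
```

The same pattern applies to models: calling `model.to(device)` moves the parameters, after which inputs need to live on the same device.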

Applications of PyTorch

PyTorch’s flexibility makes it an ideal choice for a wide range of applications in artificial intelligence. From natural language processing to computer vision, PyTorch is being used to create state-of-the-art AI models across many fields. Let’s explore a few areas where PyTorch is making a significant impact:

Natural Language Processing (NLP)

PyTorch is widely used for NLP tasks, and PyTorch implementations of models such as BERT and GPT are in common use. Its deep integration with Hugging Face's Transformers library makes training and deploying language models easier. PyTorch supports sentiment analysis, text classification, and machine translation, offering flexibility and efficiency for researchers and developers working on NLP applications.
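As a small illustration of text classification, here is an untrained bag-of-words classifier built from core PyTorch layers only. The vocabulary, the `encode` helper, and the class `BagOfWordsClassifier` are hypothetical and far simpler than a transformer model. The sketch is only meant to show how token IDs flow through an embedding into class scores.

```python
import torch
from torch import nn

# Toy vocabulary; index 0 is reserved for unknown words.
vocab = {"<unk>": 0, "great": 1, "movie": 2, "terrible": 3, "plot": 4}


def encode(text):
    """Map whitespace-separated words to vocabulary indices."""
    return [vocab.get(token, 0) for token in text.lower().split()]


class BagOfWordsClassifier(nn.Module):
    def __init__(self, vocab_size, embed_dim=8, num_classes=2):
        super().__init__()
        # EmbeddingBag averages the word vectors of each sentence.
        self.embedding = nn.EmbeddingBag(vocab_size, embed_dim, mode="mean")
        self.head = nn.Linear(embed_dim, num_classes)

    def forward(self, token_ids):
        return self.head(self.embedding(token_ids))


# Two sentences of equal length, so they stack into one 2-D tensor.
batch = torch.tensor([encode("great movie"), encode("terrible plot")])
model = BagOfWordsClassifier(len(vocab))
logits = model(batch)  # one row of class scores per sentence
print(logits.shape)
```

The weights here are random, so the scores carry no meaning until the model is trained on labeled text.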

Computer Vision

PyTorch powers computer vision tasks like image classification, object detection, and facial recognition. The TorchVision library supplies datasets, pretrained models, and image transforms, so developers can build complex models efficiently. GPU acceleration enables large-scale data processing, which matters for applications in autonomous driving, medical imaging, and security. PyTorch's flexibility and performance make it a preferred choice for advancing AI-driven visual recognition technologies.
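To make the image classification case concrete, here is a minimal sketch of a small convolutional network. It uses plain `torch.nn` layers rather than TorchVision so that it stays self-contained. The layer sizes, the 32x32 input resolution, and the 10 output classes are arbitrary assumptions.

```python
import torch
from torch import nn

classifier = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),   # 3x32x32 -> 8x32x32
    nn.ReLU(),
    nn.MaxPool2d(2),                             # -> 8x16x16
    nn.Conv2d(8, 16, kernel_size=3, padding=1),  # -> 16x16x16
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),                     # -> 16x1x1
    nn.Flatten(),                                # -> 16
    nn.Linear(16, 10),                           # -> 10 class scores
)

images = torch.randn(4, 3, 32, 32)   # a random batch standing in for real images
scores = classifier(images)
predicted = scores.argmax(dim=1)     # index of the highest-scoring class per image

print(scores.shape, predicted.shape)
```

The network is untrained, so the predictions are meaningless. The point is the shape of the pipeline from image batch to class index.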

Reinforcement Learning

PyTorch is widely used for reinforcement learning, where AI agents learn via trial and error. Because the computation graph is rebuilt on every forward pass, a model can be updated after each interaction with the environment, which suits robotics, gaming, and automated decision-making. Researchers use PyTorch for policy optimization, deep Q-networks, and training AI to handle complex tasks autonomously.
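As a rough illustration of policy optimization, the sketch below performs a single REINFORCE-style update on a toy problem. The state, the reward rule (action 0 is rewarded, action 1 is penalized), and the learning rate are all invented for this example. A real agent would interact with an environment over many steps.

```python
import torch
from torch import nn
from torch.distributions import Categorical

torch.manual_seed(0)

policy = nn.Linear(4, 2)  # maps a 4-dim state to logits over 2 actions
optimizer = torch.optim.SGD(policy.parameters(), lr=0.1)
state = torch.randn(4)

with torch.no_grad():
    p_before = torch.softmax(policy(state), dim=0)[0].item()

# Sample an action from the current policy.
dist = Categorical(logits=policy(state))
action = dist.sample()

# Hypothetical reward rule for this toy problem.
reward = 1.0 if action.item() == 0 else -1.0

# Policy-gradient loss: raise the log-probability of rewarded actions,
# lower it for penalized ones.
loss = -dist.log_prob(action) * reward
optimizer.zero_grad()
loss.backward()
optimizer.step()

with torch.no_grad():
    p_after = torch.softmax(policy(state), dim=0)[0].item()

print(p_before, p_after)  # probability of action 0 before and after the update
```

Whichever action is sampled, the update shifts probability toward action 0 in this setup, because that is the only action the toy reward rule favors.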

Healthcare

PyTorch is used across healthcare AI, supporting medical imaging, diagnostics, and drug discovery. It helps train models for disease detection, MRI analysis, and drug interaction prediction. Its scalability and deep learning capabilities help accelerate medical research and enhance patient care, with an impact that extends from clinical applications to research in medical AI.

Why Choose PyTorch?

Given the options available, it’s understandable why many developers and researchers turn to PyTorch. The framework’s popularity can be attributed to a variety of factors, including:

Ease of Use: PyTorch's Pythonic syntax and dynamic graphs make code simple to write and debug. Even beginners in machine learning can pick up the framework quickly.

Flexibility: PyTorch can be used in both research and production environments. Users can experiment freely with different model architectures and still scale up when needed.

Strong Community Support: PyTorch has a growing, vibrant community of developers and researchers. Tutorials, documentation, and forums make it easier for newcomers to get started and find solutions to problems.

Great Integration with Other Tools: PyTorch works alongside libraries such as scikit-learn and OpenCV, so data preparation, classical machine learning, and image processing fit into the same workflow as model training.
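Libraries such as scikit-learn and OpenCV exchange data as NumPy arrays, so much of this interoperability comes down to converting between arrays and tensors. The sketch below, which assumes NumPy is installed alongside PyTorch, shows the round trip with `torch.from_numpy` and `Tensor.numpy`. The array contents are arbitrary.

```python
import numpy as np
import torch

array = np.arange(6, dtype=np.float32).reshape(2, 3)

tensor = torch.from_numpy(array)  # shares memory with `array`, no copy is made
tensor.mul_(2)                    # the in-place change is visible from NumPy too

back = tensor.numpy()             # view the (CPU) tensor as a NumPy array again

print(array)
print(back)
```

Because the memory is shared, the conversion itself is cheap. Call `.clone()` on the tensor when an independent copy is needed.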

Conclusion

PyTorch has become a dominant framework in machine learning, offering flexibility, dynamic computation graphs, and seamless GPU acceleration. Its user-friendly nature makes it a top choice for researchers and developers working on AI innovations. From natural language processing to computer vision and healthcare, PyTorch powers cutting-edge applications, enabling efficient model training and deployment. Its strong integration with various tools and libraries further enhances its appeal. Whether you're new to AI or an experienced researcher, PyTorch provides a robust and adaptable platform for building deep learning models. As AI continues to evolve, PyTorch remains a vital tool for driving future advancements in the field.